Riverside Research GEM Interns Contribute to Developments in Human-Machine Teaming by Programming Robots to Discern Nonverbal Human Communication

Robots that can recognize nonverbal human communication, such as gestures and body language, could contribute to vital, life-saving tasks, such as understanding the needs of people who are unable to speak. Riverside Research is helping to advance this capability while providing hands-on learning for the next generation of artificial intelligence/machine learning (AI/ML) specialists.

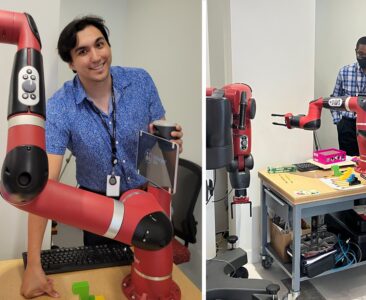

To teach robots to recognize such nonverbal actions, three of our interns in the Riverside Research Lexington office started out by programming robots to learn a collaborative tower-building game. They taught robots with different skill sets to work with humans to achieve a shared goal.

The game the robots are learning is called TEAM3. It is played in groups of three, with each player taking one of three roles: the Architect, who knows what tower the team must build but can't speak; the Builder, who builds the tower but can't see; and the Supervisor, who facilitates communication between the two. By developing the AI capabilities robots need to play this game with humans, the interns are helping robots learn to determine people's needs not only from what they say, but also from how they act.

But what our interns’ work could achieve is far more important than winning a game.

About Riverside Research’s Contributions to Human-Machine Teaming Development

Consider the application of this technology in the medical field. Patients with aphasia (difficulty finding and articulating words), dementia, or autism could benefit from AI that interprets and analyzes their gestures. So could civilians and warfighters affected by trauma in conflict zones.

Beyond these humanitarian advances, this technology could play a more nuanced role in intelligence gathering: analyzing video footage to help analysts determine a subject's mood, state of mind, and intentions from body language as part of a threat assessment or profiling process. With technology that interprets nonverbal communication, analysts can present a more complete picture for strategic intelligence.

The Role of Gamification in Machine Development

To prepare the robots for the game, the interns first gave them the ability to understand verbal commands, the first step toward teaching them to understand nonverbal instructions. The robots interpreted these commands and attempted to complete the tasks the humans intended. The interns then used the game to train the robots further and help them learn language. During play, the robots interpret a person's nonverbal communication, translate it into verbal language, and offer the result as a guess at the person's intended goal.

The interns emphasized two-way communication with the robots and completed the project in Riverside Research’s Machine Learning Lab, which is part of Riverside Research’s Open Innovation Center (OIC).

The gamified approach to teaching the robots is also significant in its own right. While humans can learn from classroom and one-on-one instruction, research shows that learning through participatory experiences allows us to internalize lessons more deeply.

About the GEM Interns

The GEM program recruits high-quality, underrepresented students pursuing master's and doctoral degrees in applied science and engineering and matches their specific skills to the technical needs of GEM employer partners. Riverside Research has participated in the GEM program for six years.

“We’re so fortunate to continually have an incredible set of interns each summer,” said Dr. Joey Salisbury, who oversaw the interns’ work. “We’ve worked hard to establish a collaborative framework that allows for the open exchange of ideas from every level. Our interns work on meaningful and interesting projects that contribute to the mission of the business. Many thanks to Michael J. Munge, Bradon Thymes, and Lylybell Teran for their outstanding work on this project.”